Resilience and Adaptation to Advanced AI

When model safeguards fail, what's our backup plan? AI resilience is crucial to preparing for the widespread diffusion of increasingly powerful AI.

It’s 2035, and open-source developers are training an AI system expected to be capable of automating cyber vulnerability detection, biological design, and more. If its safeguards fail, or are deliberately circumvented, what defence would we have against these widespread, potentially dangerous capabilities?

AI resilience is our ability to adapt to changes brought about by deploying advanced AI systems. It prompts us to look outside of AI models themselves, and to identify ways to adapt our societal systems and infrastructure to novel risks introduced as advanced AI diffuses.

AI resilience complements other approaches to AI safety, such as those that modify or restrict certain AI capabilities to lessen their potential danger. But why can’t we rely solely on these ‘capability-focused’ interventions, and what makes AI resilience necessary as well as helpful?

The Need for Societal AI Resilience

Advanced AI systems are rapidly diffusing across society, and we’re quickly discovering new applications for this technology. Whilst many uses promise to be beneficial, we’re already experiencing the impact of novel, harmful applications of AI systems. High-schoolers use open-source AI systems to humiliate women and girls by creating non-consensual deepfakes. Employers risk amplifying racial biases by integrating LLMs into their CV screening processes. Hackers may use LLM-based AI agents to assist with cyberattacks by detecting previously unknown cyber vulnerabilities.

Given the potential scale and diversity of impacts that advanced AI might have on society, we need to act to ensure its benefits ultimately outweigh these harms.

Traditionally, we might try to prevent harmful usage by having AI models refuse to carry out malicious requests, or by monitoring the inputs and outputs of a closed-source model. However, a broader approach is necessary, since model-focused approaches like these face three key challenges:

Advanced AI is getting cheaper to develop, making it harder to keep tabs on the many actors developing it. Hardware innovation and algorithmic advances mean compute is cheaper, and you need less of it to achieve a given level of performance (Pilz, Heim & Brown 2023). This means small actors, and even individual citizens, could produce potentially dangerous models in the future. In such a world, controlling who builds which AI systems seems undesirable, and it would be infeasible to prevent every actor from developing dangerous capabilities and making them accessible to the general population (especially considering the number of jurisdictions you’d have to cover).

Model safeguards aren’t failsafe, meaning harms can slip through the net. Safeguards that make models less likely to produce harmful outputs are neither mandatory nor failsafe. Some developers choose to deploy AI systems without safeguards to preserve model quality, whilst others’ safeguards can be cheaply removed. This is especially true of open-weight models, but closed-source models have also been jailbroken (for example, via many-shot jailbreaking) and could be stolen if their weights are not secured.

Model capabilities are inherently dual-use, making it hard to cleanly restrict harmful capabilities without impacting beneficial use-cases (Anderljung & Hazell 2023; Narayanan & Kapoor 2024). For example, cyber capabilities help both cyberattackers and cyber defence companies detect vulnerabilities. Unfortunately, it’s difficult to tell whether the balance lies in favour of offence or defence (Lohn & Jackson 2022; Garfinkel & Dafoe 2019). This uncertainty fuels a tense debate between groups favouring free release and those favouring restrictions, as each feels it has valid arguments that its position is the most risk-reducing. Since this is largely a battle of ideology rather than empirics, we continually run the risk of making net-negative deployments, or creating net-negative regulation, without a strong empirical case either way.

Adaptation offers a potential route forward: by understanding the context of who is using an AI system and what infrastructure is at risk, we can hope to curtail harmful use-cases of an AI capability whilst leaving its beneficial use-cases untouched.

How would we achieve such a balance? The good news is, we’ve done it a number of times with other technologies. The bad news is AI presents unique challenges in terms of the pace and ubiquity of its diffusion.

Adaptation’s Historical Successes

In many ways, adaptation isn’t a novel proposal or an alien approach to dealing with technological risks. Consider the diffusion of motor vehicles throughout the 20th century. As the number of motor vehicles rose, so, sadly, did road accident deaths. However, deaths began to fall from around 1970, even as the number of vehicles continued to grow.

Why did road deaths start to decrease? It certainly wasn’t because cars were getting any slower. Deaths were reduced through a number of technological and societal interventions, many of them forms of adaptation.

On the technical side, crumple zones, seatbelts and airbags made accidents less likely to be fatal. Simultaneously, societal adaptations included road safety awareness campaigns, the enforcement of speed limits, and changing norms and laws around drink-driving. In 1965, complaints about car safety peaked, as the public demanded change. Today, it seems much more likely that the benefits of motor vehicles outweigh their downsides, thanks to societal adaptation.

What is Adaptation to Advanced AI?

AI resilience is our capacity to adapt to advanced AI’s diffusion. But what is adaptation in the context of AI?

Adaptation in AI is part of a dual approach to risk management. To draw a more familiar analogy, consider our response to climate change. We aim to reduce emissions to curtail climate change at its source. We also adapt, to mitigate harms from whatever climate change happens despite our best efforts to prevent it, for example by building flood defences and changing which crops we grow. Adaptation tends to involve changing our social systems and infrastructure: in this case, so that a changing climate does less damage to infrastructure and, consequently, to people’s wellbeing.

In AI, we try to make deployments safer by applying safeguards to the models themselves during development. We should also invest effort in societal adaptation: handling the risks that remain as AI capabilities diffuse, despite our best safeguarding efforts. Adaptive interventions tend to take effect outside the AI model itself, targeting actions downstream of the model instead (e.g. those pertaining to a specific use-case or type of harm).

Formalising Adaptation

My colleagues and I give a more formal definition of AI adaptation in a recent paper (see figure below). The first column tracks a five-step progression by which an AI system could have a negative impact on the world. At the second level, it identifies the types of intervention available at each step. Many approaches to AI safety aim to stop dangerous capabilities from diffusing in the first place, and so involve only the grey boxes in the diagram.

By calling for adaptation, we highlight the need for an increased focus on the last three intervention types (in yellow): avoiding potentially harmful use of that AI system, defending against the initial harms caused by that use, and remedying the downstream impacts of that harm.

Example: Adapting to Spear Phishing Risk

Say that a hypothetical AI system had the necessary capabilities to largely automate the sending of convincing spear phishing emails for financial scams. What would we do to prepare for its release?

The framework helps us identify adaptation interventions at each point in the causal chain:

Avoidance: first, we can try to stop misuse or reduce the likelihood of accidental harm. This could look like passing, and visibly enforcing, laws relating to the use-case, or improving our technical capabilities to detect misuse so that misusers are more likely to be caught.

Defence: assuming some potentially harmful use happens, we can try to stop it from causing an initial harm, such as a victim’s credentials being stolen. In the spear phishing example, this could look like improving spam filters. Some defensive actions are non-technical: we could also defend against spear phishing by raising public awareness that anyone could be targeted, making people more vigilant.

Remedy: assuming the initial harm happens (someone’s credentials are stolen), we can still act to limit the negative impact stemming from that harm. Hypothetically, this could look like insurance or compensation schemes that later recover some or all of the money victims lose.
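Because avoidance, defence and remedy act at successive points in the causal chain, their effects stack. The Python sketch below is a hypothetical illustration of that idea, not a model from the paper: it assumes each layer independently removes a fixed fraction of the harm that reaches it, and the names (`Layer`, `residual_harm`) and all effectiveness figures are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """A hypothetical intervention layer in the causal chain."""
    name: str
    # Assumed fraction (0.0 to 1.0) of the harm reaching this layer that it stops.
    effectiveness: float


def residual_harm(baseline_loss: float, layers: list[Layer]) -> float:
    """Expected loss left after each layer removes its share of what reaches it.

    Assumes layers act independently and in sequence, which is a simplification.
    """
    remaining = baseline_loss
    for layer in layers:
        remaining *= 1.0 - layer.effectiveness
    return remaining


# Invented numbers for the spear phishing example; not empirical estimates.
layers = [
    Layer("avoidance (enforced laws, misuse detection)", 0.5),
    Layer("defence (spam filters, public awareness)", 0.6),
    Layer("remedy (insurance, compensation)", 0.5),
]

print(residual_harm(1_000_000, layers))
```

Under these made-up numbers no single layer comes close to stopping all harm, yet together they cut the expected loss by 90%. Real interventions interact, and their effectiveness is hard to measure, so treating them as independent multipliers is only a rough intuition pump.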

What is AI Resilience?

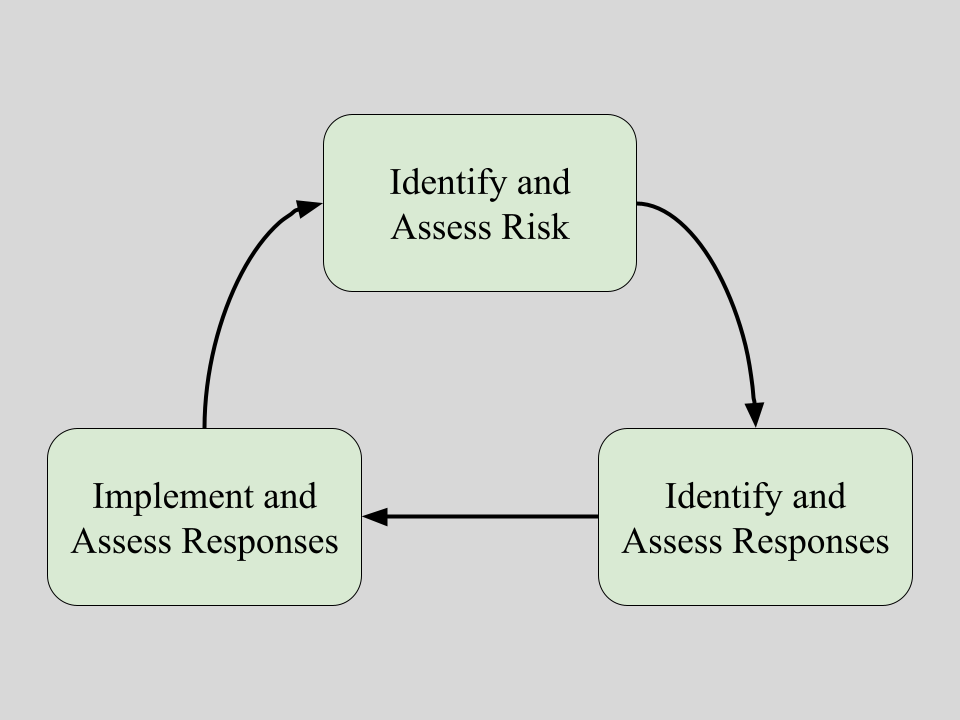

In practice, adaptation to advanced AI has to be carried out by real people at real institutions and organisations. Adaptation is a continuous process, and each institution involved will perform one or more parts of it. Our resilience is our collective capacity to carry out the following adaptive cycle:

Identifying and assessing risks: this means things like evaluating capabilities and monitoring the impacts of already-deployed AI systems on societies and systems.

Identifying appropriate responses: this will often involve innovation, and sometimes capital to iterate on potential technological solutions. For example, we’ll have to develop defensive technologies like automated vulnerability patching or disinformation detection. We may also have to design complex policies and remedial schemes that account for technical details of AI capabilities.

Implementing responses: finally, organisations have to actually implement those responses at the appropriate scale. This can involve scaling a technology, or making consumers aware that they will need to use new tools or change their behaviour to adapt to advanced AI.

Achieving AI resilience is a society-scale effort. It requires establishing efficient information sharing and other types of cooperation between organisations, and the talent and financial resources to identify risks and implement defensive technologies. We elaborate on other recommendations in the paper.

Subscribe for more AI Resilience

Future posts in this series will delve into exactly how we achieve resilience. I’ll cover topics like:

‘Defensive accelerationism’

Who’s responsible for which parts of AI resilience?

Real-world initiatives and events that build resilience