AI-Generated Misinformation Is Still a Risk

Half the world's population voted in 2024, relatively unaffected by AI-generated deepfakes. Can we conclude that AI-generated misinformation isn't the risk we thought it was?

In early 2024, a year in which nearly half the world’s population would head to the polls, many feared that widespread AI-generated misinformation and deepfakes would dupe voters and undermine democracy. The World Economic Forum ranked misinformation and disinformation among its gravest global risks, warning it could "radically disrupt electoral processes."

Yet as ballot boxes were sealed and votes tallied, elections appeared to have proceeded without major incidents (mostly; more on this later). Commentators have noted that the impact of AI-generated misinformation was unclear, and fell far short of the most apocalyptic scenarios. Some have gone so far as to declare that the ‘disinformation panic is over’ entirely (not just for AI).

While I agree with many of the individual claims in these assessments, I think their tone too readily dismisses AI misinformation risks. They neglect evidence that disinformation can have, and has had, acute impacts on people’s actions, and that AI could dramatically increase the cost-effectiveness of disinformation campaigns. Prematurely dismissing the potential impact of AI disinformation could lead to dangerous inaction in the face of changes brought about by cheap and widespread AI tools.

This post offers a more nuanced perspective between two extremes: that AI won’t affect electoral integrity at all, and an AI-generated misinformation apocalypse (the scenario some authors chose as their target to refute). It also points out adaptations that are still necessary to deal with the most acute disinformation risks.

Evidence against AI-enhanced influence is flimsy

As a brief recap, the core concerns about AI-generated misinformation centre on three factors: AI’s low operating cost, its persuasiveness, and its rapid scalability.

Critics dismissing these concerns rely on evidence that proves inadequate under careful examination:

It over-relies on researcher-gathered datasets when identifying trends or the absolute scale of problems.

Its comparisons to existing means of generating synthetic media are shallow. Photoshop and human-operated bots do already exist, but pointing this out neglects how AI changes the economics by slashing the cost of content creation.

It focuses too heavily on the demand-driven element of misinformation, failing to consider that the increasing ease of supplying misinformation could cause acute, counterfactual harm (for example, the last-minute deepfake during the Slovakian election).

1) Researcher-gathered datasets don’t tell the whole story

Researchers have documented instances of AI-generated disinformation in incident databases (1, 2, 3). One argument I’ve seen for dismissing AI disinformation risk points out that these datasets contain few instances of AI-generated disinformation.

While useful for qualitative research, these datasets capture only reported or discovered instances, missing undetected cases such as those shared through private channels, or those that fall outside researchers’ purview.

For example, the FT reports: “The Alan Turing Institute identified just 27 viral pieces of AI-generated content during the summer’s UK, French and EU elections combined.” However, the Institute’s search strategy only gathered instances that had been reported in the news media.

By extension, another mistake is using these datasets to comment on the relative rates of different types of AI-generated misinformation. AI Snake Oil points out that "To our surprise, there was no deceptive intent in 39 of the 78 cases in the [WIRED Elections Project] database", concluding that intentional deception is not that common. This measure is essentially irrelevant as evidence of the degree of AI misinformation risk: what of the remaining ~50% of cases, some of which were intended to deceive? I argue that AI-generated disinformation could still become much more frequent (section 2), and that deceptive media can and does have an impact (section 3).

2) AI transforms the misinformation economy

Focusing on instances of intended deception, critics often argue that bad actors can already create fake content using existing tools like Photoshop. This comparison alone fails to address how AI tools could fundamentally change the economics of disinformation, which could have two primary effects:

Sophisticated actors (like nation states) could achieve greater personalisation, scale and speed at lower costs. While these actors are already well-resourced, AI could uplift their activities.

Amateur actors who lack advanced skills or resources (like trolls, or small groups with political aims) can now generate convincing, deceptive content. This could lead to more attempts at disinformation, some of which may break through, even if these isolated attempts are, on average, the least sophisticated.

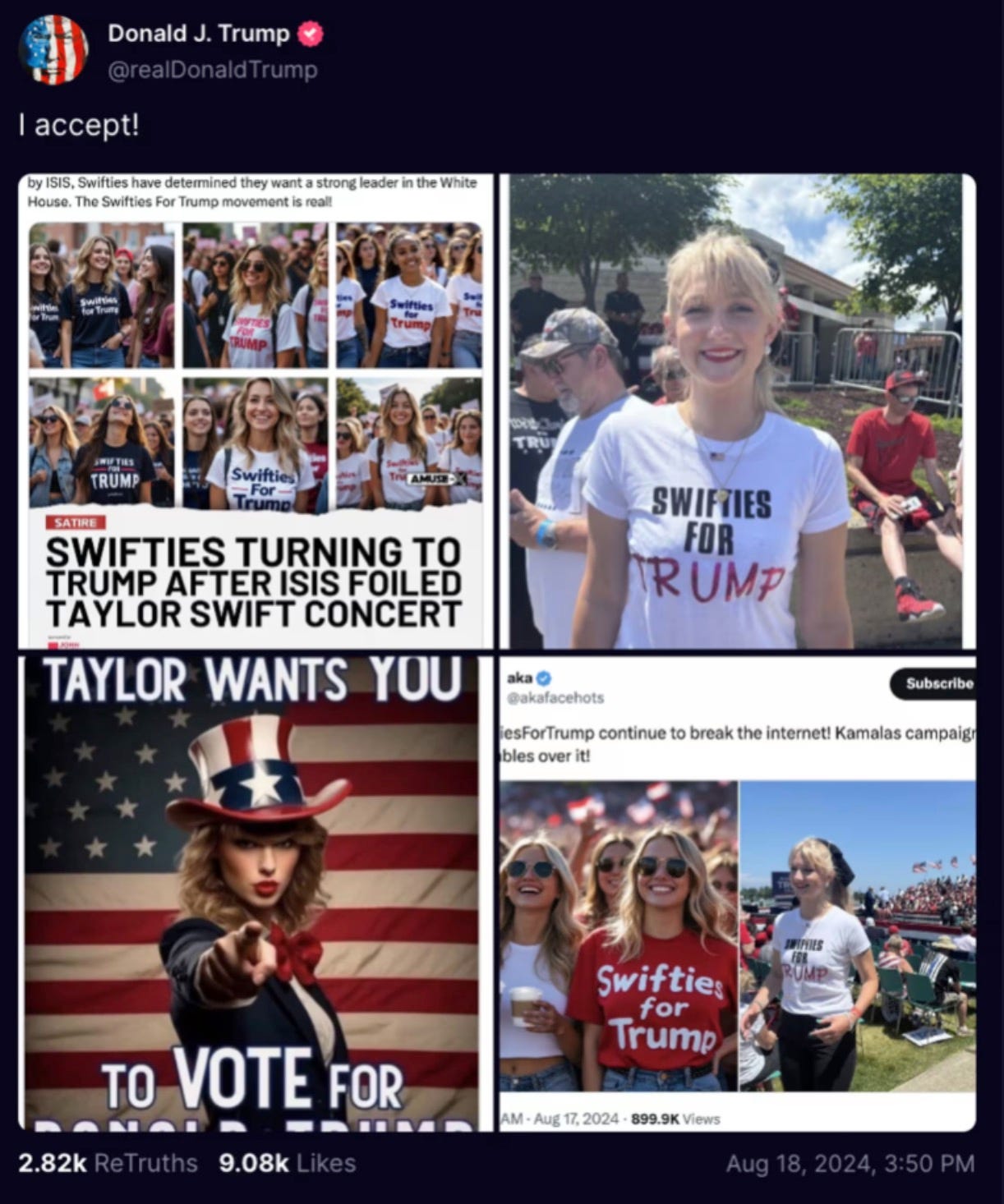

Trump’s use of a “Swifties for Trump” deepfake (image above) illustrates the latter point. Leaving its impact aside for a moment: while these images could have been photoshopped before accessible AI tools existed, would they have been made in that world? It’s hard to say in this particular instance, but it’s quite implausible that the lower barrier to entry wouldn’t change behaviour.

The Centre for Long-Term Resilience’s report on disinformation explores other potential effects.

Beyond images: AI persuasion

The current discourse on AI misinformation focuses on AI-generated images. Optimistically, most people will already be aware of AI image generation and could be developing a healthy skepticism of images they see online; I think images will be the least impactful vector of misinformation going forward.

Another significant threat lies in automated persuasion at scale. Language models can generate highly convincing arguments, even when users know they’re speaking to an AI system, and businesses and politicians are already seizing the opportunity to use AI to personalise communications with voters. Used with integrity, AI tools could legitimately increase political engagement. Used irresponsibly, for instance by more ethically dubious copycats selling similar tools, they could make it easier to target inconsistent political messaging at ever finer granularity. We’ve already seen granular and inconsistent advertising via social media in elections throughout the 2010s. AI tools could go beyond demographic-level targeting, inventing persuasive arguments in real time for specific users with specific questions.

3) Supply of misinformation has real-world effects (despite misinformation being primarily demand-driven)

The misinformation field has recently shifted to recognise that misinformation, AI-generated or otherwise, is demand-driven. That is, misinformation is consumed and even created by people with existing disaffected views who want information that feeds their appetite, rather than being the cause of their disaffection. Misinformation is increasingly viewed as a symptom of deeper issues.

While this shift in mindset seems valid, it has led some commentators to overcorrect, concluding that “the disinformation panic is over”. This overcorrection risks neglecting the real, acute impacts of large-scale, effective misinformation campaigns. It’s important to remember that misinformation supply can cause harm too.

A recent interview with Trump discusses one such concern: that a convincing, well-timed deepfake could cause a world leader to take fast, decisive action. Misinformation has increased tensions between Israel and Pakistan in exactly this way, when Pakistan’s defence minister reacted aggressively to a fake article describing Israeli aggression. While the truth was eventually discovered in that instance and the rhetoric walked back, it’s likely the world was left a little worse for the event. Neither scenario depends on AI-generated content, but I expect AI could make such occurrences more frequent (at least for a time).

Recent events continue to demonstrate that well-targeted disinformation campaigns can have significant real-world impacts. Romania’s 2024 presidential election provides a stark example of the potential impact of digital interference. After a last-minute candidate came first in the first round of voting, Romanian intelligence services reported finding almost 100,000 TikTok accounts promoting him, allegedly orchestrated by Russia. These circumstances led to the unprecedented step of Romania’s Constitutional Court annulling the election.

While the full investigation is ongoing, and it’s presently unclear what role, if any, AI played, this incident demonstrates that scaled digital campaigns can significantly affect elections, and already have. AI tools could make similar levels of interference more common, because they make disinformation campaigns more economically feasible by reducing the need for human operatives. It’s conceivable that AI already played a role in this case.

A more trivial case demonstrates that misinformation can have real-world impacts even when those it affects hold no clear pre-existing view. In Birmingham, England, online misinformation led thousands of people to gather for a non-existent firework display. Reporters also claim that AI-generated articles and social media posts played a part in perpetuating the rumour.

Misinformation is highly complex, and even experts find it difficult to determine whether, or to what extent, misinformation undermines electoral integrity, let alone AI-generated disinformation specifically. However, it’s clear that convincing disinformation and intentional influence operations can have a real, counterfactual impact on the world.

The path forward: practical adaptations to AI-generated disinformation

While addressing the underlying demand for misinformation remains crucial, we must also adapt to AI's transformation of information creation and distribution. Consider society’s response to climate change: we reduce emissions but, because that’s difficult, we also invest in adapting to its effects. Analogously, we must improve institutional trust, but also adapt to changes AI will bring to the information landscape.

It’s difficult and undesirable to rein in AI capabilities to mitigate misinformation. Instead, we need to think about how to transform the information landscape to deal with the potential for widespread AI-generated information.

The table below lists a few adaptations, which we describe in more detail in this paper. As one example, human verification for operating social media accounts may need to be stepped up, so that social media users know they’re interacting with other humans. While this is costly, it may be less costly than wading through hundreds of compelling bots to find human users.

Conclusion

In 2024, we didn't see a misinformation apocalypse, but we're still witnessing the early stages of AI's integration into our information ecosystem. The inability to precisely measure AI disinformation's impact on events like the New Hampshire primary or Slovakia's election shouldn't lead to complacency.

We need to maintain a dual approach: addressing the root causes of misinformation demand while adapting to AI's transformation of information supply. The risk isn't over; it's still evolving.