A Policy Agenda for Defensive Acceleration Against AI Risks

The same AI capabilities underlie benefits and harms. How can we assure AI safety without curtailing beneficial applications? Defensive acceleration charts a possible course.

Introduction

AI systems are becoming more capable across a variety of tasks, and some of those capabilities lend themselves to harm: uplifting cybercriminals, accelerating state-backed influence operations, and automating job applications to the point of flooding recruitment processes. Without major defensive action, continued capability improvement and widespread diffusion of AI systems could disrupt the workings and integrity of our societal systems across the board.

Managing AI risks, however, faces a fundamental conundrum: AI capabilities are ‘dual-use’. Intervening to reduce the potency of a harmful capability also hinders its beneficial applications. For instance, blocking requests to detect cyber vulnerabilities in websites would equally hinder an attacker and a defender. Should a system accept this request? What effect would that have on cybersecurity overall?

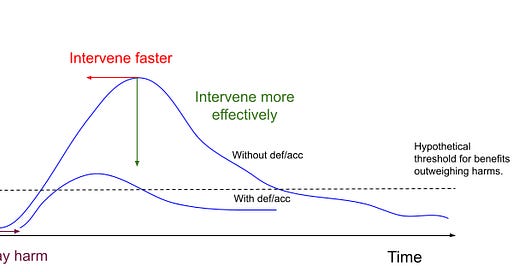

The growing movement behind defensive acceleration aims to address this conundrum. Defensive interventions aim to curtail harmful uses of AI systems whilst leaving upstream AI capabilities relatively untouched, thus liberated for beneficial use-cases. For example, if we could somehow ban social media bots run by AI systems, it wouldn’t matter that AI is capable of generating divisive content. Acceleration is about helping those interventions happen faster. The general concept of defence is popular with technology optimists, since it charts a course for continued technological progress whilst mitigating risks. But will defences work to protect society from AI’s greatest risks?

I begin to evaluate that question by offering a definition of defensive acceleration, based on my earlier work on societal adaptation and resilience. I emphasise that - unlike in other definitions - defensive interventions don’t need to be technological. I’ll then discuss why it should form part of our AI risk response by highlighting its strengths and limitations, and finally present a policy agenda that would support the defensive acceleration movement.

Defensive acceleration policies increase public visibility into AI harms, build capacity to intervene defensively, and manage the introduction of risk-increasing technologies. By adopting these policies, we can improve our ability to intervene defensively and enjoy AI’s benefits with reduced levels of harm.

Defining Defensive Acceleration

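Defence is a very old concept, and so too is defensive acceleration. In the 5th century BC, the Mohists, followers of the Chinese philosopher Mozi, were among the earliest known advocates of a defence-first approach, developing expertise in fortification and siege defence.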

In the era of large language models, defensive acceleration has been discussed in a few ways. The first is through differential technological development, which defines defensive technologies as those decreasing the severity or likelihood of a particular risk from a risk-increasing technology - without modifying the technology itself. The agenda calls for government funding, technology regulation, and philanthropic research to bring safety-boosting technologies forward in time, and to delay risk-increasing ones. More recently, Vitalik Buterin coined ‘d/acc’ in his techno-optimism agenda, which he defines as accelerating all of defensive, decentralised, democratic and differential technologies.[1] Finally, Entrepreneur First has launched a def/acc programme, supporting technology entrepreneurship that promotes growth, democratic values, vibrant and open institutions, and human agency and capability.

A commonality between these definitions is that defensive interventions occur downstream of technological advances (in our case, that’s after the development and deployment of AI capabilities). They contrast with - and complement - interventions that modify or gate upstream AI capabilities, such as safeguarding (e.g. refusals of harmful requests), deployment corrections, and calls for pauses and moratoria to ‘make AI systems safe’. Instead, defence takes AI capabilities as they are, and defends against novel, potentially harmful use-cases.

These existing discussions of defensive acceleration have already proven helpful by charting a positively-valenced course for technological development, inspiring programmes such as def/acc. However, the discussion is currently more directional than precise. To begin to develop a policy agenda for defensive acceleration, and evaluate its merit, I’ll start by offering a definition. Building on my previous work on Societal Adaptation to advanced AI, I propose the following definitions:

Defensive Intervention: an intervention that reduces harm that materialises downstream of a risk-increasing technological deployment.

Therefore I define defensive acceleration as the following:

Defensive Acceleration: bringing forward in time defensive interventions, relative to the risk-increasing technologies they defend against.

This definition implies a number of new ideas about accelerating defensive technologies:

Scoping interventions as downstream of a technological deployment means assuming the risk-increasing technology is already deployed. In AI’s case, the technology might be a foundation model like GPT-4. Other interventions might intervene on the model, but defensive interventions don’t. This is similar to Sandbrink et al.’s definition of defensive technology, which doesn’t modify the risk-increasing technology.

Focusing on harm instead of offence or attack means we are defending against both intentional misuse of a technology, and unintended side-effects of intended use. For example, a foreign state might undermine democracy through automated influence campaigns, or citizens may receive faulty information from querying LLMs. Both could be addressed by defensive acceleration.

The intervention doesn’t need to be technological. To defend against political deepfakes, we could build technology that identifies AI-generated content, or we could raise public awareness of the existence of deepfakes, making it less likely that people believe what they see without checking more trusted sources.

Focusing on relative acceleration means we can bring forward defensive interventions and delay certain risk-increasing technological applications. For example, we might bring forward the availability of cyber vulnerability detection capabilities to defenders, whilst relatively delaying their public availability. Analogously, we take a relative approach to climate defence by delaying climate change through policies like phasing out diesel vehicles, using the time bought to implement adaptations.

This definition more precisely conceptualises defensive acceleration for the purpose of AI risk management. Other related concepts often accelerate technologies for a broader set of goals, which interact complexly with AI risk management and sometimes contradict it. Consider ‘decentralising’ AI models - one of the 4 ‘d’s in Buterin’s d/acc, of which another is ‘defence’. Decentralisation through open sourcing AI weights has real benefits for research and innovation, but also accelerates access to potentially dangerous capabilities - potentially causing a deceleration in defence.

Importantly, defensive acceleration is a complement to other approaches - not a replacement. It’s generally advisable to manage risk through defence in depth, rather than silver-bullet solutions. Later, I’ll specifically highlight the gaps that defensive acceleration doesn’t fill.

Examples of Defensive Interventions

These are not the only ways to reduce risks downstream of technological deployment: they’re just ones that I would label as ‘defensive’ under the previous definition. Many other risk mitigation strategies fall under what I’d categorise as avoidance or remedy. For example, harm from car crashes can also be reduced by implementing and enforcing speed limits. Similarly, hiring discrimination can be mitigated through bias audit laws, an avoidance strategy that decreases the chance that harmful systems are deployed in the first place.

These are not mutually exclusive categories: defensive interventions often also have an ‘avoidance’ effect - especially for malicious use cases; if an attacker feels less likely to be successful because of strong defence, they’re less likely to attack in the first place.

Strengths of Defensive Intervention

Defensive interventions are particularly useful when harmful usage is impossible to fully avoid through other risk management strategies. Defensive interventions are appropriate for defending against intentional misuse and unintentional harms from non-malicious usage, and are often amongst the most politically feasible.

Defence may be the only strategy against malicious actors who have already decided to act outside of the law. Deterrence interventions are less effective for motivated threat actors. Whilst model safeguards can help to reduce egregious misuse, growing access to AI systems and open weight models makes safeguards less reliable. For example, AI-assisted cyber attacks can only be defended against by patching vulnerabilities before they’re exploited. State-backed, AI-assisted influence campaigns can be defended against by detecting and responding to AI-generated content, by raising public awareness, and through human verification methods. However, defence doesn’t always work against AI misuse: misuse between individuals, like using existing open weight AI models to produce non-consensual deepfake pornography, is more difficult to defend against. Deterrence still plays an important role for some misuse cases.

Unintended harms are usually best addressed by combining defensive interventions with avoidance and remedial strategies. Whilst laws and standards help reduce accidental harms by non-malicious actors, defence may be particularly useful when AI-powered services could disrupt our existing societal systems and institutions. For example, AI-generated content could flood existing societal systems which rely on low volumes (e.g. recruitment processes flooded by automated job applications); AI tools could introduce societal-level discrimination through services like CV screening; and, more generally, releasing AI agents on today’s internet could disrupt current online services. Each of these cases is complex, and requires domain-specific context to mitigate potential problems and harms. However, defence will often be part of the approach to harm reduction. Some general defences against accidental harms might include implementing stringent human-verification checks in systems we don’t want agents to be able to navigate, and technologically enabling scalable human supervision of AI agents and decision making processes.

Defensive interventions are politically feasible. Implementing defences often requires motivation, not legislation (which is currently difficult to pass). Defensive interventions can be incentivised by the market when entrepreneurs are aware of an opportunity, e.g. to build cyber defensive technology, or businesses are aware of a risk.

Limitations of Defensive Interventions

Defence can’t be relied upon to reduce all AI risks, however. By discussing defence in other contexts, we can see it has some limitations.

Defence alone rarely reduces risk to zero. Our defences against today’s largest threats haven’t been tested. For most risks, a lack of motivation has been our best defence. Nation states likely don’t conduct major cyber attacks against NATO countries because it’d be classified as an act of war, not because they can’t. Nuclear weapons are not fired due to mutually assured destruction, not because they’d be shot down.[2] Engineered or procured viruses are not purposefully released because bioterrorists are not sufficiently numerous, skilled or motivated.

Many harms persist despite defensive strategies. Despite spam detection technology, phishing is still at the root of the majority of cyber attacks. Pandemics are notoriously difficult to defend against, and bots have been a problem since the beginning of social media. Which AI risks are most difficult to defend against is a big question, requiring domain-specific analyses. However, it’s likely that deterrence, safeguarding and avoidance are important parts of any risk mitigation strategy.

With very few exceptions, risk-increasing technology arrives first, and we intervene defensively in response. Fundamentally, defensive interventions must be designed with actual threat models in mind: we need to know what could go wrong to defend against it. Our most proactive defences have still used very concrete threat models, or kernels of the larger problem they’re trying to address. For example, RSA cryptography (which keeps internet data transfers private) was developed before the public internet existed. Whilst private email networks were common, the inventors of RSA acted proactively to adapt existing cryptographic methods for a foreseen, public internet. The resulting paper carries one of the best openers of all time: “the era of “electronic mail” may soon be upon us”.

Fortunately, many AI risks involve scaling existing problems through automation, as in spear-phishing, conducting cyberattacks and powering disinformation campaigns via AI-backed social media bots. Envisioning these problems at increased scale might enable sufficient defensive foresight to adapt the scale and nature of our defences in time. However, AI may also present novel risks, like AI agents running amok on the internet. Given AI’s rapid diffusion, there’s a risk that it could lead to drastic harm or irreversible changes before defences are developed. It’s therefore imperative that we manage the introduction of harmful technology, and deploy defensive interventions as quickly as harms emerge. I’ll discuss how in the next section.

Finally, we must consider how actors respond to defences. Defences can cause risk compensation: people may take more risks when they feel a sense of protection. According to one economist, drivers compensated for improvements in car safety at the time by driving more dangerously - though the effect size has since been disputed. It’s almost always better to implement a defence than not to; however, it is worth considering how any given defence will affect behaviour. For example, automatic code scanners could cause programmers to check for vulnerabilities less carefully.

These limitations leave room for alternative approaches, such as safeguarding AI systems, and employing strategies for avoiding and remedying harms. Sandbrink et al. (2021) also raise the concept of substitute and low-risk alternatives to risky technology - for example, procedural algorithms might be preferred to generative AI approaches for decision making, as they’re more predictable.

A Policy Agenda for Defensive Acceleration

Defensive Acceleration is about bringing forward defensive interventions, relative to risk-increasing technologies they defend against. If we think this is a good approach for managing AI risk, how could we use public policy to accelerate defensive interventions?

I suggest a defensive acceleration policy agenda means enacting policies that:

Gain strategic visibility. To identify the right interventions, we first need to understand what the harms are and how they arise.

Build capacity to intervene. To develop the interventions, we need the capacity to build defensive technology, or implement other interventions.

Afford time to intervene. Defensive acceleration means bringing forward defensive interventions relative to risk-increasing technologies. We might not need to slow down frontier capabilities, but there are ways to deploy them that can benefit defenders over attackers.

1. Gaining Strategic Visibility

If we don’t understand how harm happens, we can’t intervene. Therefore defensive acceleration starts with gaining visibility - and the faster we gain visibility, the faster we intervene.

Pre-deployment evaluations and AI capability demonstrations make a good start towards strategic visibility, giving us directional evidence about downstream risks from deployment of AI capabilities. In the best case, this allows us to act with foresight - before real-world harms have emerged at a detectable scale. For example, demonstrations that AI systems can exploit zero-day vulnerabilities give justification to proactively increase investment in cybersecurity.

However, not all threats are likely to be predictable ahead of time, in scale or nature. We therefore need to pair pre-deployment evaluations with actual observations of AI systems interacting with the world through post-deployment monitoring. This involves gathering information about the integration of AI and its usage, and observing its impacts and potential incidents. Tracking this information in real time allows us to act quickly, but today we have very few mechanisms for post-deployment monitoring.

A defensive acceleration policy agenda would increase our strategic visibility by:

Investing further resources into incident monitoring and reporting processes. A key to intervening defensively is to notice harms as soon as they emerge.

In other industries, incident monitoring has worked best when learnings are extracted immediately. Therefore companies deploying AI systems should adopt internal incident monitoring and reporting processes to learn from early deployment mistakes, and avoid future over-reliance on AI systems.

Furthermore, governments measuring AI risks centrally should adopt AI-specific incident monitoring processes in order to quantify and understand AI harms (CSET, CLTR).

Establishing information channels between governments and the developers of AI models and applications, and sharing information publicly on a limited basis.

Beneficial information includes:

AI misuse patterns and abuse rates. Building a picture of how AI systems are misused could inform defensive interventions, or further investigation into particular AI capabilities.

User demographics. Understanding who uses AI for which purposes, and how different use cases grow, helps inform civil society’s areas of focus. For example, there is little information about the rise of AI companionship.

Information could be gathered through:

Voluntary agreements, following the successes of agreements between AI developers and AI Safety Institutes (AISIs) for model access.

Legislation that mandates responses to certain information requests from AI model and application developers.

Staged rollout could be employed to help AISIs understand how new capabilities and models are used in practice (Seger et al. 2023). Agreements between governments, companies and consumers could be brokered to facilitate close collaboration during staged rollout, or developers could commit to reporting on the rollout of new capabilities unilaterally.

Promoting technical AI governance methods that increase visibility of AI systems. For example, governments can encourage AI developers to implement watermarks, content provenance, and AI agent identifiers through standard setting, directly funding their development or, most strongly, incentivising them through technology-forcing regulations.

Conducting risk assessments to identify, analyse and evaluate risk. Risks need to be assessed by AI experts at the level of foundation model deployment, and by domain experts during the adoption of new AI tools. For example, we can identify and assess the potential impact of a particular AI tool in critical settings like healthcare and courts, or understand how safety mechanisms in cybersecurity and biosecurity might be undermined by new AI-powered tools.

2. Building Capacity to Intervene Defensively

Once harms are identified, we need the capacity to intervene.

Defensive interventions are sometimes technical, like building a new cybersecurity tool, and other times more social, like raising public awareness of automated spear-phishing attempts. In either case, interventions are best designed by domain experts, since designing them requires a deep understanding of specific AI harms in their domain. However, those experts need to be equipped with knowledge about how AI capabilities are changing their domain, and require the resources to intervene.

Our capacity for intervention can be increased in several ways:

Governments can directly fund domain experts to develop interventions. They can fund research through programmes similar to DARPA’s AI Cyber Challenge (AIxCC) and other grants programmes, or create advance market orders and purchase commitments for certain defensive technologies with measurable results (e.g. a benchmark for automated vulnerability patching). This funding model is important for public good technologies - i.e. when nobody is otherwise incentivised to fund them.

Governments can stoke existing market incentives through technology-forcing regulations coupled with strategic regulatory certainty, and circulating information about novel risks to entrepreneurs. When information gathered in model evaluations is sensitive for commercial or security reasons, governments may need to coordinate its disclosure.

AI developers have funded certain defensive interventions. For example, the cybersecurity grants programme at OpenAI may partly serve the public good, whilst also aligning with the company’s commercial interest in promoting its systems to technologists.

Governments, AI developers and defenders can work together to accelerate defence-boosting initiatives, such as through granting early access to advanced AI capabilities to defenders. For example, by giving advanced vulnerability detection capabilities to defenders first, cyber vulnerabilities could be patched before they’re exploited.

Education bodies should ensure that AI basics are taught. For example, social scientists should understand AI influence campaigns and gain practical experience with generating AI disinformation. Biologists should evaluate AI systems’ capabilities in their own domain. Business development graduates should understand the strengths and limitations of generative models for process automation.

3. Affording Time to Intervene Defensively

Accelerating defence means bringing it forward relative to the development of risk-increasing technologies. Because defence is usually reactive, and AI systems diffuse rapidly, defensive acceleration policies have to include deploying risk-increasing AI capabilities responsibly, allowing the time and information flows necessary to identify novel harms and address them before they’ve grown out of control.

Affording time to defend doesn’t necessarily mean slowing down AI progress, but it does mean introducing novel capabilities carefully, and with ingenuity. Defensive acceleration policies can include:

Granting early access to entities using AI for defensive purposes, affording them more time to build defences. Further still, governments could cooperate with AI developers during gradual or staged access to novel AI systems, to begin building strategic visibility into any novel societal risks.

Giving privileged access to specific capabilities, for different users. Similarly, AI developers may develop systems with strengths in specific applications (e.g. biology, cybersecurity, persuasive writing), and only make those capabilities available to trusted actors. This doesn’t affect the average user, but could hinder attackers.

Favouring APIs for new releases rather than open source, at least initially. With APIs, deployed capabilities can be modified or restricted for certain users, and companies can monitor the inputs and outputs for signs of harm or unexpected misuse. Liability - extending to misuse of open weight models - could encourage companies deploying novel capabilities to use APIs, or legislation could specify a length of time for which a model needs to be gated.

Exercising care in releasing open weight models, with techniques like tamper-resistant safeguards, weights and watermarks, and unlearning risky knowledge. These reduce the potential for misuse, though they incur a misuse-use tradeoff.

Conclusion: Accelerating Defensively

Advanced AI systems have the potential to change all aspects of our society, from decision making at work, to cyber attacks and the way we interact with information online. Defensive acceleration is a necessary strategy for mitigating these risks without stifling beneficial uses, by focusing on interventions that address harms downstream of technological deployments.

We’ve seen, however, that defence is no silver bullet. Due to AI’s rapid diffusion, defence is unlikely to happen quickly enough to address severe AI risks without concerted, additional effort. Furthermore, humanity has a track record of reactive defence over proactive defence - a particular challenge since AI systems diffuse rapidly, leading to potentially rapid scaling of AI-assisted harms.

In this post, I highlighted how policy can accelerate defensive interventions. It will be necessary for policymakers to build foresight and strategic visibility into AI risks across domains, build cross-domain capacity to process that information and design effective defensive interventions, and introduce risk-increasing technologies cautiously. With these efforts - and similar efforts across industry and all of society - we can significantly accelerate our ability to defend against AI risks.

Closing Exercise

Consider the invention of RSA cryptography. Private email networks existed, soon to be made public. RSA’s inventors realised that privacy would be key on the public internet, so set to work looking for a solution. RSA is one of a few defensive technologies we invented proactively. Its success is owed to forecasting the risks that would ensue from a scaled-up, public version of an existing email system.

What effects will AI systems have in your domain? For example, could automation scale certain threats? In this eventuality, what defences might be necessary and practicable?

Thank you to Lennart Heim, Bart Jaworski and Eddie Kembery for helpful comments on this piece.

[1] Buterin’s post was written as a riposte to the reactionary ‘e/acc’ movement, which itself was a riposte to ‘EA’ agendas that were interpreted as focusing on bans and moratoria. I see this as coincidental, rather than key to the concept of defensive acceleration, since defensive acceleration has been a risk management strategy for millennia, much longer than any of these other concepts.